Like the polling debacle of 1992, 2015 offers an opportunity to innovate

May’s election results proved to be significantly different from the opinion polls. Since then, the leading polling organisations have devoted considerable energy to determining what went wrong and how their approaches can be improved. Following the publication of YouGov’s diagnosis, Anthony Wells discusses the main issues that were identified, possible solutions and the implications for the polling industry more broadly.

Credit: David Holt CC BY-SA 2.0

Last week YouGov put out their diagnosis of what went wrong at the election – the paper is summarised here and the full report, co-authored by Doug Rivers and myself, can be downloaded here. As is almost inevitable with investigations like this, there were lots of small issues that couldn’t be entirely ruled out, but our conclusions focus on two issues: the age balance of the voters in the sample and the level of political interest of the people in the sample. The two issues are related: the level of political interest among the people interviewed contributed to the likely voters in the sample being too young. There were also too few over-seventies in the sample because YouGov’s top age band was 60+ (meaning there were too many people aged 60-70 and too few aged over 70).

I’m not going to go through the whole report here, but will concentrate on what I think is the main issue: how politically interested the people who respond to polls are, and how that affects the age profile of samples. In my view it’s the core issue that caused the problems in May. It’s also the issue more likely to have affected the whole industry (different pollsters already use different age brackets) and the one that is more challenging to solve (adjusting the top age bracket is easily done). It’s also rather more complicated to explain!

People who take part in opinion polls are more interested in politics than the average person. As far as we can tell, that applies to both online and telephone polls, and as response rates have plummeted (the response rate for phone polls is somewhere around 5%) it has become ever more of an issue. It has not necessarily been regarded as a huge issue, though: we have caveated it in polls about the attention people pay to political events, but it has not previously prevented polls from measuring voting intention accurately.

The reason it had an impact in May is that the skew towards the politically interested affected different social groups disproportionately. Young people in particular are tricky to get to take part in polls, and the young people who have taken part have been the politically interested ones. This, in turn, has skewed the demographic make-up of likely voters in polling samples.

If the politically disengaged people within a social group (such as an age band or social class) are missing from a polling sample, then the more politically engaged people within that same group are weighted up to replace them. This disrupts the balance within the group: you have the right number of under-twenty-fives, but too many politically engaged ones and not enough with no interest in politics. Where polls once showed a clear turnout gap between young and old, that gap has shrunk in the polls; it’s less clear whether it has shrunk in reality.
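The within-group imbalance can be illustrated with a minimal Python sketch. All the numbers are hypothetical rather than taken from YouGov’s report: an under-25 group that is 40% politically engaged, an assumed turnout of 80% among the engaged and 30% among the disengaged, and a raw sample in which the disengaged are under-represented. Weighting on age alone rescales the whole group equally, so the group ends up the right size but its apparent turnout is still too high.

```python
# Hypothetical illustration: weighting an age group to the right size
# does not fix the engaged/disengaged mix inside that group.

# Assumed probability of actually voting for each kind of under-25
TURNOUT = {"engaged": 0.80, "disengaged": 0.30}

# Assumed true make-up of 100 under-25s in the population
TRUE_COUNTS = {"engaged": 40, "disengaged": 60}

# Assumed raw sample of under-25s: the disengaged mostly failed to respond
SAMPLE_COUNTS = {"engaged": 80, "disengaged": 40}


def weight_on_age_only(counts, target_size):
    """Give every respondent in the group the same weight so the group sums
    to its target size. Like weighting on age alone, this rescales the group
    but leaves its engaged/disengaged proportions unchanged."""
    weight = target_size / sum(counts.values())
    return {kind: n * weight for kind, n in counts.items()}


def expected_turnout(weighted_counts):
    """Expected share of the (weighted) group that actually votes."""
    total = sum(weighted_counts.values())
    voters = sum(w * TURNOUT[kind] for kind, w in weighted_counts.items())
    return voters / total


weighted_sample = weight_on_age_only(SAMPLE_COUNTS, target_size=100)
print(f"weighted group size: {sum(weighted_sample.values()):.0f}")
print(f"true under-25 turnout:   {expected_turnout(TRUE_COUNTS):.0%}")
print(f"weighted-sample turnout: {expected_turnout(weighted_sample):.0%}")
```

With these invented inputs the age weight brings the group to the correct size, yet its implied turnout is about 63% rather than the true 50%: the sample’s turnout gap between young and old shrinks even though nothing has changed in the population.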

To give a concrete example from YouGov’s report, people under the age of twenty-five make up about 12% of the population, but they are less likely than older people to vote. In the face-to-face BES survey, 12% of the sample would have been under twenty-five, but only 9.1% of the people who actually cast a vote were under twenty-five. Compare this with the YouGov sample: once again, 12% of the sample would have been under twenty-five, but because they were more interested in politics, 10.7% of YouGov respondents who actually cast a vote were under twenty-five.
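The BES and YouGov figures can be turned into an implied turnout ratio. If a group is a share p of adults and a share s of voters, then s = p·t_g / (p·t_g + (1 - p)·t_rest), which rearranges to t_g / t_rest = s(1 - p) / (p(1 - s)). This is a simple rearrangement added for illustration, not a calculation from the report, and it treats the 12% figure as exact.

```python
def relative_turnout(group_share_of_adults, group_share_of_voters):
    """Turnout of a group relative to the rest of the adult population.

    If a group is a share p of adults and a share s of voters, then
    s = p*t_g / (p*t_g + (1 - p)*t_rest), which rearranges to
    t_g / t_rest = s*(1 - p) / (p*(1 - s)).
    """
    p, s = group_share_of_adults, group_share_of_voters
    return s * (1 - p) / (p * (1 - s))


# Figures quoted above: under-25s are about 12% of adults
bes = relative_turnout(0.12, 0.091)     # face-to-face BES
yougov = relative_turnout(0.12, 0.107)  # YouGov sample
print(f"BES:    under-25s vote at {bes:.2f}x the rate of older adults")
print(f"YouGov: under-25s vote at {yougov:.2f}x the rate of older adults")
```

On these figures the BES implies that under-25s voted at about 0.73 times the rate of older adults, whereas the YouGov sample implies about 0.88 times: a noticeably smaller turnout gap.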

Political interest had other impacts too: people who paid a lot of attention to politics behaved differently from those who paid little. For example, one of the givens during the last Parliament was that former Liberal Democrat voters were splitting heavily in favour of Labour. Breaking down 2010 Liberal Democrat voters by how much attention they pay to politics, though, shows a fascinating split: 2010 Lib Dem voters who paid a lot of attention to politics were more likely to switch to Labour, while those who paid little attention were more likely to switch to the Conservatives. If polling samples contained people who were too politically engaged, then we’d have too many LD=>Lab switchers and too few LD=>Con switchers.

So, how do we put this right? We’ll go into the details of YouGov’s specific changes in due course (they will largely consist of adding political interest as a target and updating the age bands, but as ever, we’ll test them top to bottom before rolling them out on published surveys). Here, however, I want to talk about the two broad approaches I can see for the wider industry going forward.

Imagine two possible ways of doing a voting intention poll:

- Approach 1 – You get a representative sample of the whole adult population, weight it to the demographics of the whole adult population, then filter out those people who will actually vote, and ask them who they’ll vote for.

- Approach 2 – You get a representative sample of the sort of people who are likely to vote, weight it to the demographics of people who are likely to vote, and ask them who they’ll vote for.

Either of these methods would, in theory, work perfectly. The problem is that pollsters haven’t really been doing either of them. Lots of people who don’t vote don’t take part in polls either, so pollsters actually end up with a sample of the sort of people who are likely to vote, but then weight it to the demographics of all adults. This means the final samples of voters over-represent groups with low turnout.
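The gap between the two clean approaches and what pollsters have actually been doing can be shown with a toy calculation. This is a minimal Python sketch with invented numbers: two age groups, made-up turnout rates and a hypothetical “Party X” that does better among the young. As an extreme simplification, it assumes non-voters do not respond to polls at all.

```python
# Hypothetical two-group electorate; every number here is invented.
ADULT_SHARE = {"young": 0.30, "old": 0.70}  # share of all adults
TURNOUT = {"young": 0.45, "old": 0.75}      # share of each group that votes
PARTY_X = {"young": 0.50, "old": 0.30}      # Party X share among each group's voters


def normalise(d):
    """Rescale a dict of non-negative values so they sum to 1."""
    total = sum(d.values())
    return {k: v / total for k, v in d.items()}


def party_x_share(voter_mix):
    """Party X share of the vote, given each group's share of voters."""
    return sum(share * PARTY_X[g] for g, share in voter_mix.items())


# What Approaches 1 and 2 both aim at: the real mix of voters.
true_voters = normalise({g: ADULT_SHARE[g] * TURNOUT[g] for g in ADULT_SHARE})

# The hybrid: non-voters do not respond, so the sample is entirely voters,
# yet it is weighted to the all-adult mix. Filtering on turnout then removes
# nobody in this simplified case, so the "voters" keep the adult age profile.
hybrid_voters = dict(ADULT_SHARE)

print(f"young share of voters: true {true_voters['young']:.1%}, "
      f"hybrid {hybrid_voters['young']:.1%}")
print(f"Party X share:         true {party_x_share(true_voters):.1%}, "
      f"hybrid {party_x_share(hybrid_voters):.1%}")
```

On these invented numbers the hybrid puts young people at 30% of voters instead of about 20%, and overstates Party X by roughly two points, because the low-turnout group is counted as if it voted at the same rate as everyone else.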

Both methods present real problems. May 2015 illustrated how hard it is for pollsters to get the sort of people who don’t vote into their samples. However, Approach 2 faces an equally challenging problem: we don’t know the demographics of the people who are likely to vote. The British exit poll doesn’t ask about demographics, so we don’t have that to go on, and even if we base our targets on who voted last time, what if the type of people who vote changes? While British pollsters have always taken the first approach, many US pollsters have taken a route closer to Approach 2 and have on occasion come unstuck on exactly that point, assuming an electorate that is too white or too old (or vice versa).

The period following the polling debacle of 1992 was a period of innovation. Lots of polling companies took lots of different approaches and, ultimately, learnt from one another. I hope there will be a similar period now – to follow John McDonnell’s recent fashion of quoting Chairman Mao, we should let a hundred flowers bloom.

From the point of view of an online pollster using a panel, the ideal way forward for us seems to be to tackle the shortage of “non-political” people in our samples. We have a lot of control over whom we recruit to samples, so we can tackle it at source: we record how interested in politics our panellists say they are, and add that to our sampling quotas and weights. We’ll also put more effort into recruiting people with little interest in politics. We should probably look at turnout models too: we mustn’t get lots of people who are unlikely to vote into our samples and then assume they will vote!
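Adding political interest to the weights alongside age can be sketched with raking (iterative proportional fitting), a standard way of matching several margins at once. This is a generic illustration, not YouGov’s actual weighting scheme: the variable names, categories, sample counts and target shares are all invented, and a real pollster would need a trustworthy external source for the political-interest target.

```python
from collections import defaultdict


def rake(respondents, targets, iterations=50):
    """Iterative proportional fitting (raking).

    respondents: list of dicts, e.g. {"age": "under 40", "interest": "low"}
    targets: {variable: {category: target_share}}, shares summing to 1
    Returns one weight per respondent so weighted margins match the targets.
    """
    weights = [1.0] * len(respondents)
    for _ in range(iterations):
        for var, shares in targets.items():
            # Current weighted total in each category of this variable
            totals = defaultdict(float)
            for r, w in zip(respondents, weights):
                totals[r[var]] += w
            grand = sum(totals.values())
            # Scale each respondent so this variable hits its target shares
            for i, r in enumerate(respondents):
                cat = r[var]
                weights[i] *= shares[cat] * grand / totals[cat]
    return weights


def weighted_share(respondents, weights, var, category):
    """Weighted share of respondents in `category` of variable `var`."""
    hit = sum(w for r, w in zip(respondents, weights) if r[var] == category)
    return hit / sum(weights)


# Hypothetical panel sample that over-represents the politically interested
CELL_COUNTS = {
    ("under 40", "high"): 30, ("under 40", "low"): 10,
    ("40+", "high"): 40, ("40+", "low"): 20,
}
sample = [
    {"age": age, "interest": interest}
    for (age, interest), n in CELL_COUNTS.items()
    for _ in range(n)
]

# Hypothetical targets for both weighting variables
TARGETS = {
    "age": {"under 40": 0.40, "40+": 0.60},
    "interest": {"high": 0.50, "low": 0.50},
}

weights = rake(sample, TARGETS)
for var, shares in TARGETS.items():
    for cat in shares:
        print(var, cat, round(weighted_share(sample, weights, var, cat), 3))
```

Each pass rescales the weights to hit one variable’s targets in turn; repeating the passes converges on weights that match both margins when, as here, every combination of categories appears in the sample. Weighting on interest as well as age up-weights the low-interest respondents within each age band, which is exactly the within-group correction that weighting on age alone cannot make.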

For telephone polling there will be different challenges (assuming, of course, that phone pollsters diagnose similar causes; they may find the cause of their error was something completely different). Telephone polls struggle enough as it is to fill quotas without also trying to target people who are uninterested in politics. Perhaps the solution there will end up being along the second route: recasting quotas and weights to aim at a representative sample of likely voters. While they haven’t explicitly gone down that route, ComRes’s new turnout model seems to me to be in that spirit, using past election results to build a socio-economic model of the sort of people who actually vote and then weighting their voting intention figures along those lines.

Personally, I’m confident we’ve pinned down the cause of the error; now we have to tackle getting it right.

—

Note: This post represents the views of the author and not those of the Democratic Audit UK or the LSE. It originally appeared on UK Polling Report. Please read our comments policy before posting.

—

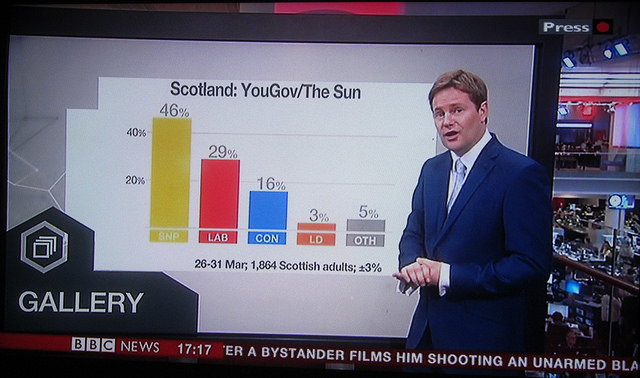

Anthony Wells is Director of YouGov’s political and social opinion polling and currently runs their media polling operation for the Sun and Sunday Times. He also runs the UK Polling Report website; an independent blog on opinion polling that is a widely used source for academics and journalists.

Democratic Audit's core funding is provided by the Joseph Rowntree Charitable Trust. Additional funding is provided by the London School of Economics.
