Smartphone apps can be used to create a climate of local participation, but challenges remain

Smartphones are now ubiquitous, making it possible to connect to the internet permanently and without hindrance. But can they be used to create a climate of public participation? Dr Carolin Schröder and Anna Schuster used an app, FlashPoll, to test what does and doesn’t work, and found that several factors influence how many people take part when it comes to their local home, work and educational communities.

Credit: MIKI Yoshihito, CC BY 2.0

Given the rather optimistic reception of the potential of ICT for democracy, the fact that many devices are now portable can be seen as adding further potential. Provided people have access to a smartphone or a tablet and are online, the mobility of devices allows us to “amplify participation in a spatial and temporal dimension and will widen the range of possible uses for urban planning and design”.

If we follow this line of thought, namely that turning participation mobile means fewer limits on when and where people can take part, then mobile participation could also allow a greater number of participants, increasing both the availability of citizen information and the capacity for deliberation and co-decision-making. This triggered our interest, and this is a short report on how we tested it by developing a mobile app for citizen participation.

The FlashPoll mobile app

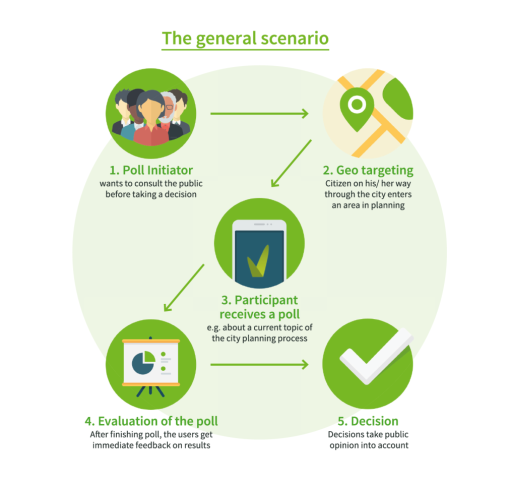

FlashPoll is designed for location-based polling and instant feedback on various issues that come up in an urban development context. At its current stage of development, the app can be downloaded for Android phones (an iOS version will be available this summer) or used via a web app. A geofence can be defined separately for each poll; it limits the group of potential participants to those who physically enter a specific area with their smartphones at least once during the poll. (This does not apply to the web app.) Answering a poll, by contrast, can be done from anywhere and at any time while the poll is running. Participants see the results only once they have answered, at which point they are shown immediately.

Graphic of the general scenario and example of the accumulated results in the mobile application. FlashPoll © 2014. All Rights Reserved.
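To make the geofence rule described above more concrete, here is a minimal Python sketch of how such an eligibility check might work. It is not FlashPoll’s implementation: the circular fence, the haversine distance, the names `CircularGeofence`, `Poll` and `can_answer`, the format of the location “pings” and the example coordinates are all hypothetical. It only encodes the three rules stated in the text: a smartphone user must enter the fence at least once while the poll is running, the answer itself can be given from anywhere during the poll, and the fence does not apply to the web app.

```python
import math
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Tuple

# Hypothetical ping format: (timestamp, latitude, longitude) reported by a phone
Ping = Tuple[datetime, float, float]

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass
class CircularGeofence:
    """Assumed fence shape: a circle around a centre point."""
    lat: float
    lon: float
    radius_m: float

    def contains(self, lat: float, lon: float) -> bool:
        # Haversine great-circle distance from the fence centre to the point
        phi1, phi2 = math.radians(self.lat), math.radians(lat)
        dphi = phi2 - phi1
        dlmb = math.radians(lon - self.lon)
        a = (math.sin(dphi / 2) ** 2
             + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
        distance_m = 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))
        return distance_m <= self.radius_m


@dataclass
class Poll:
    fence: CircularGeofence
    start: datetime
    end: datetime

    def is_open(self, t: datetime) -> bool:
        return self.start <= t <= self.end

    def entered_fence(self, pings: Iterable[Ping], before: datetime) -> bool:
        # True if any ping taken while the poll was open, and no later than
        # the answer time, lies inside the fence
        return any(
            self.is_open(t) and t <= before and self.fence.contains(lat, lon)
            for t, lat, lon in pings
        )

    def can_answer(self, pings: Iterable[Ping], answer_time: datetime,
                   via_web_app: bool = False) -> bool:
        if not self.is_open(answer_time):
            return False  # answers are only accepted while the poll runs
        if via_web_app:
            return True  # the geofence does not apply to the web app
        # The answer may be sent from anywhere; only an earlier visit counts
        return self.entered_fence(pings, answer_time)


# Usage with a hypothetical fence centre and radius
fence = CircularGeofence(52.5125, 13.3269, radius_m=300)
poll = Poll(fence, datetime(2015, 1, 5), datetime(2015, 1, 19))
visited = [(datetime(2015, 1, 6, 10), 52.5126, 13.3270)]
print(poll.can_answer(visited, datetime(2015, 1, 10)))  # True
```

Separating “has entered the fence” from “is answering now” mirrors the distinction in the text: location restricts who may take part, not where or when the answer is given.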

Testing FlashPoll

While FlashPoll has already been tested in several contexts, here we focus on three recent tests conducted on the campus of Technische Universität Berlin. These tests aimed to provide insight into how the form of announcement, the thematic focus of a poll and users’ motivations relate to the actual participation rate. To inform potential participants about the opportunity to participate, various media and formats were used, such as newsletters, social media, institute websites and small posters on bulletin boards on and near the campus, and their number and variety increased from one test to the next:

- The first poll asked students open questions about their experiences and perceptions of the campus. Participants: 15.

- The second test was conducted in collaboration with TU Berlin University Sports and asked for feedback on the range of courses on offer, sports facilities, user experience, the popularity of the courses and reasons for (non-)participation. Participants: 50.

- The third test gathered users’ opinions about newly introduced break discs in the university library, which were intended to help regulate the usage times of the library desks. Participants: 660.

Although the tests have not shaken our conviction that mobile apps can increase participation, there are four very practical and crucial aspects to consider that might challenge the perception of (e- and) m-participation as “amplifying participation in a spatial and temporal dimension”:

- (Public) awareness is key to a successful poll: The very first poll was not announced officially. It was available only through the mobile app, and the response was very low. The second test was announced through a large mailing list (20,000 recipients), again with a low response. The third test, the library poll, was promoted through paper posters and leaflets. In addition, the option of participating via a web link was announced, and this option accounted for an impressive increase in the number of participants.

- Individual motivations to participate must be taken into consideration: After becoming aware of an opportunity to participate, another major challenge is whether the topic (or the question, the announcement or marketing slogan) is of individual interest. From the test results, we can only assume that this was the case. But why are people interested in answering these polls?

It certainly helps if potential participants feel that their voice is being heard. The first test did not mention any potential impact at all, and participation was very low. Participation increased only slightly in the second test, but clearly in the third, where it was made clear from the start that the commissioning institution was very interested in users’ opinions and that the outcome would influence the further use of break discs.

- Privacy is certainly an issue: Comparatively few people complained openly about privacy. Those who did questioned the need for location targeting on privacy grounds. But in other tests we got the impression that the initial step of downloading and installing an app is often seen as an obstacle to individual participation. Accordingly, the majority chose to answer the poll via a computer (81%), a smaller group answered via the web app on their smartphone (16%) and only 3% via the app on their smartphone.

- Location-based polling is context-related: While the first two tests were announced only through online channels, paper posters and leaflets for the third test were put up right inside the library. This suggests that immediate physical proximity of the problem, the (public) invitation and the opportunity to participate facilitates participation: 48% answered the poll right there in the library, 38% answered outside after being informed via online media and just 14% answered after leaving the library.

Some further thoughts

While these results are only preliminary, there seems to be a relationship between the forms of announcement, the thematic focus of a poll and users’ motivations. It should also be noted that it is difficult to tell which action caused which effect. But cautious conclusions from this research phase could be:

The additional opportunity to use the web app increased the number of participants. Bearing in mind that all iOS users and people without smartphones had to use the web app anyway, this suggests that combining online and mobile access to polls may lead to a larger number of actual participants than a stand-alone mobile app. The option of using a web app during a participation process seems even more important when it comes to privacy, where transparent and clearly visible information about how location data is used, and why it is needed, is essential for creating trust and acceptance. Online participation opportunities seem to be perceived as less intrusive on privacy than mobile solutions.

The number of participants is also higher if a test is marketed simultaneously through several online and offline media outlets. Similarly, participation numbers seem to increase if a test is coupled with a specific event or an ongoing face-to-face participation process, and if participants feel that their participation will have a direct impact.

In conclusion, FlashPoll does enable a specific form of public involvement, but its specific architecture seems to challenge the idea of spatial and temporal ubiquity. And if we transfer these interim findings to an urban planning context, the physical proximity of people, the problem or question posed, and physical space are all relevant for participation, even if the poll questions are not related to a specific physical space.

—

Note: Further information about FlashPoll can be found here. This article gives the views of the authors, and not the position of Democratic Audit UK, nor of the London School of Economics. Please read our comments policy before commenting.

—

Dr Carolin Schröder is Head of the Participation Research Unit at the Technische Universität Berlin/ Technical University Berlin

Anna Schuster is a Researcher with Teaching Duties at the Technische Universität Berlin/ Technical University Berlin

This post is based on a presentation given by Dr Schröder at the 2015 CeDem conference in Krems, Austria.

Democratic Audit's core funding is provided by the Joseph Rowntree Charitable Trust. Additional funding is provided by the London School of Economics.
